线上问题概述

某天下午,客服反馈项目中部分人的功能不能使用,部分人的可以正常使用。经排查,发现有一台服务器接受请求后不处理,dump了堆栈信息和线程的信息后,重启了服务然后一切正常。

排查问题思路

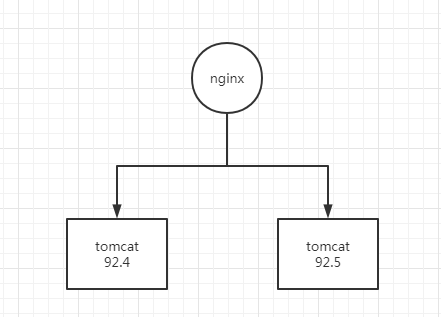

- 线上系统架构采用的是nginx负载均衡到两个节点的服务器上,此次是其中一台服务器(92.4)出问题

-

首先查看堆栈信息,没有发现oom报错。然后项目较老使用的是CMS(ConcurrentMarkSweep)垃圾收集器,下面是堆栈信息,可以看出JVM各个区也都正常

Heap Configuration: MinHeapFreeRatio = 40 MaxHeapFreeRatio = 70 MaxHeapSize = 2097152000 (2000.0MB) NewSize = 524288000 (500.0MB) MaxNewSize = 524288000 (500.0MB) OldSize = 5439488 (5.1875MB) NewRatio = 2 SurvivorRatio = 1 PermSize = 262144000 (250.0MB) MaxPermSize = 262144000 (250.0MB) Heap Usage: New Generation (Eden + 1 Survivor Space): capacity = 349569024 (333.375MB) used = 125871224 (120.04015350341797MB) free = 223697800 (213.33484649658203MB) 36.007545107886905% used Eden Space: capacity = 174850048 (166.75MB) used = 16912984 (16.129478454589844MB) free = 157937064 (150.62052154541016MB) 9.672850647430192% used From Space: capacity = 174718976 (166.625MB) used = 108958240 (103.91067504882812MB) free = 65760736 (62.714324951171875MB) 62.36199552817892% used To Space: capacity = 174718976 (166.625MB) used = 0 (0.0MB) free = 174718976 (166.625MB) 0.0% used concurrent mark-sweep generation: capacity = 1572864000 (1500.0MB) used = 604906696 (576.8839797973633MB) free = 967957304 (923.1160202026367MB) 38.45893198649089% used Perm Generation: capacity = 262144000 (250.0MB) used = 174978296 (166.87230682373047MB) free = 87165704 (83.12769317626953MB) 66.7489227294922% used -

查看线程问题,发现有报错,信息如下,发现有个线程在链接数据库查询的时候报错了,线程显示在WAITTING,所以应该是查询数据库的时候遇到问题了,大概问题定位到是数据库连接出问题,也就能解释的通为什么另一个节点的tomcat是正常的。

2020-04-29 16:48 cpu_busy: 59 "http-8080-287" daemon prio=10 tid=0x00007fbf5b690800 nid=0x62c1 in Object.wait() [0x00007fbef9b51000] java.lang.Thread.State: WAITING (on object monitor) at java.lang.Object.wait(Native Method) - waiting on <0x00000007947ec8d8> (a com.mchange.v2.resourcepool.BasicResourcePool) at com.mchange.v2.resourcepool.BasicResourcePool.awaitAvailable(BasicResourcePool.java:1402) at com.mchange.v2.resourcepool.BasicResourcePool.prelimCheckoutResource(BasicResourcePool.java:594) - locked <0x00000007947ec8d8> (a com.mchange.v2.resourcepool.BasicResourcePool) at com.mchange.v2.resourcepool.BasicResourcePool.checkoutResource(BasicResourcePool.java:514) at com.mchange.v2.c3p0.impl.C3P0PooledConnectionPool.checkoutAndMarkConnectionInUse(C3P0PooledConnectionPool.java:707) at com.mchange.v2.c3p0.impl.C3P0PooledConnectionPool.checkoutPooledConnection(C3P0PooledConnectionPool.java:634) at com.mchange.v2.c3p0.impl.AbstractPoolBackedDataSource.getConnection(AbstractPoolBackedDataSource.java:128) at org.springframework.orm.hibernate3.LocalDataSourceConnectionProvider.getConnection(LocalDataSourceConnectionProvider.java:81) at org.hibernate.jdbc.ConnectionManager.openConnection(ConnectionManager.java:446) at org.hibernate.jdbc.ConnectionManager.getConnection(ConnectionManager.java:167) at org.hibernate.jdbc.AbstractBatcher.prepareQueryStatement(AbstractBatcher.java:161) at org.hibernate.loader.Loader.prepareQueryStatement(Loader.java:1700) at org.hibernate.loader.Loader.doQuery(Loader.java:801) at org.hibernate.loader.Loader.doQueryAndInitializeNonLazyCollections(Loader.java:274) at org.hibernate.loader.Loader.doList(Loader.java:2542) at org.hibernate.loader.Loader.listIgnoreQueryCache(Loader.java:2276) at org.hibernate.loader.Loader.list(Loader.java:2271) at org.hibernate.loader.custom.CustomLoader.list(CustomLoader.java:316) at org.hibernate.impl.SessionImpl.listCustomQuery(SessionImpl.java:1842) at org.hibernate.impl.AbstractSessionImpl.list(AbstractSessionImpl.java:165) at org.hibernate.impl.SQLQueryImpl.list(SQLQueryImpl.java:157) at com.gnetis.roadshow.dao.GnetRsConferenceDAO.queryConferenceTotalNumBySQL(GnetRsConferenceDAO.java:659) at com.gnetis.roadshow.conference.confManage.service.impl.ConferenceSearchManage.queryConferenceTotalNumBySQL(ConferenceSearchManage.java:770) at com.gnetis.roadshow.conference.confManage.service.impl.ConferenceSearchManage.selecteAcceptanceConference(ConferenceSearchManage.java:474) at sun.reflect.GeneratedMethodAccessor386.invoke(Unknown Source) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:616)-

首先进入DB的数据库连接数监控页面查看数据库连接是否正常,发现连接数有点大,线上使用了数据库连接池,每台机器最大连接池数为50,所以总的连接数最大是100,但是下面当时故障时间点的连接数是90个,猜想可能是92.4这台机器的连接数满了导致请求不能处理,然后进入mysql,使用show processlist查看运行的线程,只查看其中92.4连接的线程,发现92.4的连接很多而且很多的state是sleep而且time的时间很大,大概有十几天。

我从中挑了一个,反推或者这个连接的时间,然后用时间去查日志,发现92.4里面当时有个定时任务在执行时报错了。通过代码和业务分析后发现,是由于疫情期间参会人数暴增,代码中参会人的属性是short类型,导致参数人强制类型转换后变成负数,导致定时任务报错。hibernate中旧版本有bug报错后没有释放连接,导致连接池被耗完,后面的请求获取不到连接一直在等待。

-

经验总结

- 首先就是做好日志中错误信息的监控,对所有报错都及时处理

- 增加数据库连接池的最大连接数,增加单台机器的数据库连接监控并及时告警